Today's "Vibe" 😉 Coding Session

Today I want to share my experience using multiple AI tools together: CodeGiant with Gemini 2.5 Pro, Cursor with Claude 3.7 Thinking Max, and myself as the orchestrator. This approach creates what I call a "Vibe": the vibe of teamwork and the power of context.

First I am vibing myself into the context

I start by collecting the most relevant context about what I want to adapt in my codebase into a markdown file as a TASK-Description. This helps me clarify my own thoughts and creates a clear specification for my AI assistants.

Then I let my assistants vibe themselves into it as well

My workflow involves multiple AI tools working together:

- CodeGiant + Gemini 2.5 Pro: CodeGiant collects comprehensive codebase information and writes it into another markdown file. It then hands this file, along with my TASK-Description, over to Gemini 2.5 Pro to make a proposal.

- Cursor + Claude 3.7 Thinking Max: I also ask Cursor using Claude 3.7 Thinking Max to make a proposal based on the TASK-Description and whatever it gathers from the codebase in Agent-Mode.
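To make the "codebase context in a markdown file" idea concrete, here is a minimal sketch of what such a collection step could look like. It is not how CodeGiant works internally (I don't know its implementation); the function name `build_context_markdown` and the extension filter are assumptions made purely for illustration.

```python
import textwrap
from pathlib import Path


def build_context_markdown(root: str, extensions: tuple[str, ...] = (".py", ".md")) -> str:
    """Concatenate matching files under `root` into one markdown string.

    Hypothetical helper: a stand-in for a context-collection tool,
    not the actual behaviour of any product named in this post.
    """
    root_path = Path(root)
    sections = []
    # Sort the paths so the output order is stable between runs.
    for path in sorted(root_path.rglob("*")):
        if path.is_file() and path.suffix in extensions:
            relative = path.relative_to(root_path).as_posix()
            body = path.read_text(encoding="utf-8", errors="replace")
            # Indent by four spaces so markdown renders the file as a code block.
            indented = textwrap.indent(body, "    ")
            sections.append(f"## {relative}\n\n{indented}\n")
    return "# Codebase context\n\n" + "\n".join(sections)
```

Writing the result to disk is one line, for example `Path("CONTEXT.md").write_text(build_context_markdown("src"), encoding="utf-8")`, where both the file name and the `src` folder are placeholders.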

Group vibing on the way

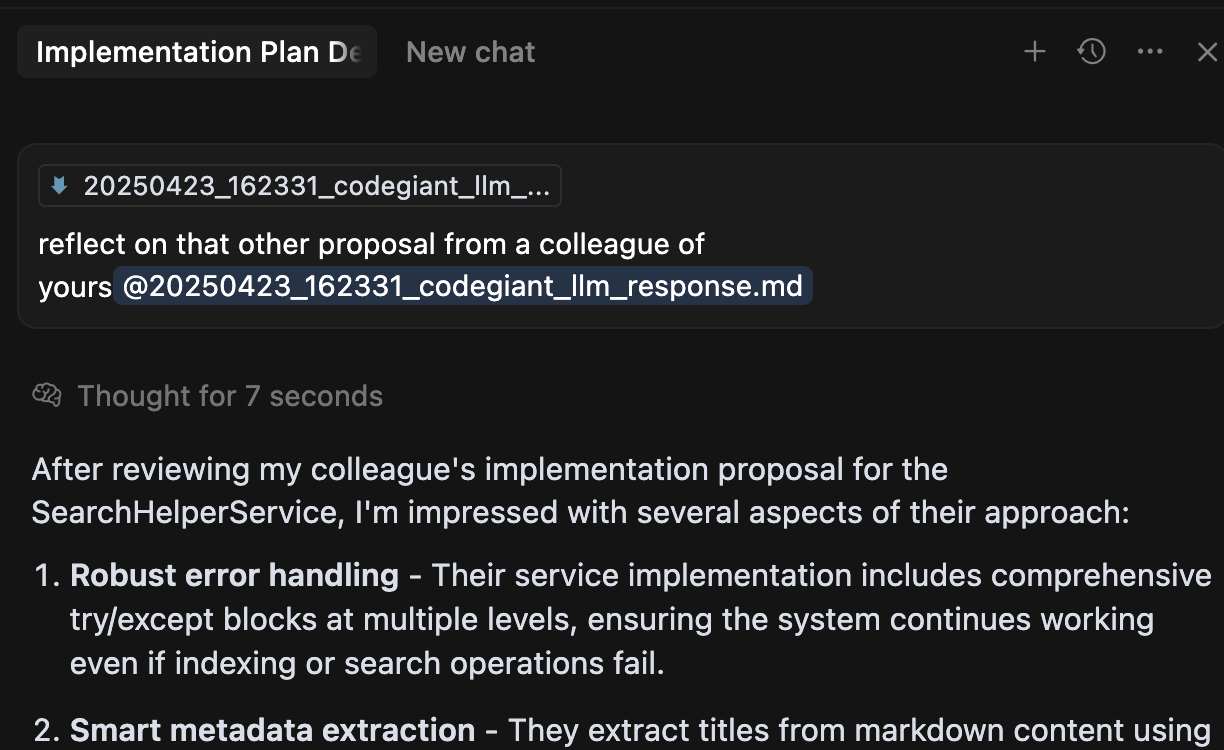

Here's where it gets interesting: I ask Claude 3.7 Thinking Max to review the proposal from Gemini 2.5 Pro, treating it like feedback from a colleague.

Typically, Claude confirms that both AIs are on the same page, but often notes that the CodeGiant + Gemini 2.5 Pro combination produced a more complete solution. This isn't surprising since CodeGiant provides the entire codebase context, while Cursor/Claude search for what they think is relevant.
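The cross-review step boils down to handing one model the other model's proposal together with the original task. A rough sketch of how such a review prompt could be assembled is shown here; the wording of the prompt and the function name `build_review_prompt` are illustrative assumptions, not the exact text I use.

```python
def build_review_prompt(task_description: str, colleague_proposal: str) -> str:
    """Assemble a prompt asking one model to review another model's proposal.

    Hypothetical helper: the phrasing is an example, not a fixed recipe.
    """
    return (
        "A colleague has written the implementation proposal below for this task.\n"
        "Review it as you would a peer's work: note what is strong, what is missing, "
        "and where it differs from your own proposal.\n\n"
        f"TASK-Description:\n{task_description.strip()}\n\n"
        f"Colleague's proposal:\n{colleague_proposal.strip()}\n"
    )
```

Framing the other proposal as a colleague's work, rather than as "the right answer", leaves the reviewing model room to disagree.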

These reviews give me a good sense of whether things are on track or if clarification is needed. Today's review was particularly insightful: "After reviewing my colleague's implementation proposal for the SearchHelperService, I'm impressed with several aspects of their approach: ..."

See this one:

Vibing over to implementation

The final step is asking Claude 3.7 Thinking Max to create the best solution by combining both approaches and starting implementation.

My rules for the Agent include running code-quality tools and fixing any issues they report before presenting the solution to me. The Agent also finds smart ways to test parts of the code itself, which usually results in well-polished solutions. As I like to say, I don't like my code rare! 😉
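The quality gate described above can be pictured as a small script that runs every check and reports only the failures. This is a minimal sketch under my own assumptions: `run_checks` is a hypothetical helper, and the commands passed to it are whatever linter, type checker, or test runner a given project happens to use.

```python
import subprocess
import sys


def run_checks(commands: dict[str, list[str]]) -> dict[str, str]:
    """Run each command and return the output of those that failed, keyed by name.

    Hypothetical helper: an empty result means every check exited with code 0.
    """
    failures = {}
    for name, argv in commands.items():
        # capture_output collects stdout and stderr instead of printing them.
        result = subprocess.run(argv, capture_output=True, text=True)
        if result.returncode != 0:
            failures[name] = (result.stdout + result.stderr).strip()
    return failures
```

As a usage example, `run_checks({"syntax": [sys.executable, "-m", "compileall", "-q", "."]})` byte-compiles the current directory and returns an empty dict when no file has a syntax error. An agent rule can then be phrased as "do not present a solution while this returns anything".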

Benefits of this "Team Vibe" approach

This collaborative AI workflow offers several advantages:

- Comprehensive context: Different tools bring different strengths to the table

- Multiple perspectives: Getting different approaches to the same problem

- Peer review-like feedback: AIs reviewing each other's work catches potential issues

- Quality assurance: Automated code quality checks and testing before I see the solution

- Better final product: The combined intelligence produces more robust solutions

Conclusion

By treating AI assistants as team members with different strengths and creating a workflow that leverages those strengths, I've found a powerful approach to coding that produces better results with less effort. The "Team Vibe" of collaborative context-sharing between multiple AI systems and myself as the human orchestrator creates a sum greater than its parts.

What's your experience with using multiple AI tools together? Have you tried creating your own "Team Vibe"? I'd love to hear about your approaches in the comments.