Test Scenarios Before Release

AI-Chatbots are everywhere currently. They are used in in customer service and support, in sales, in marketing, ...

In the end these systems represent your company when facing to the public. So they stand for your company's values, your company's culture and how professional your company approaches its duties. Not only in terms of how professional you set up your AI-Powered Software, but also in terms of how professional you work in general.

How to not do it

An example of how not to do it is the following:

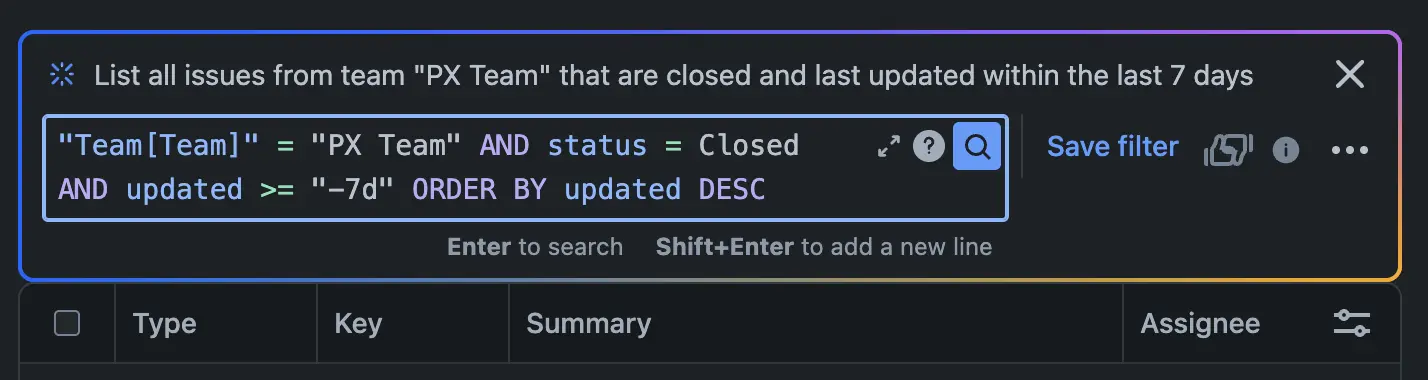

When using Advanced-Search in a project management tool from a well known company, you can ask AI to create the search query for you based on your description.

The issues is that the team selection does not work this way. You have to use the ID of the team not the name directly. I even tried to insert '... team with name "PX Team" ...' to avoid potential unclarity where i specify the id or the name. But the AI still did not understand it.

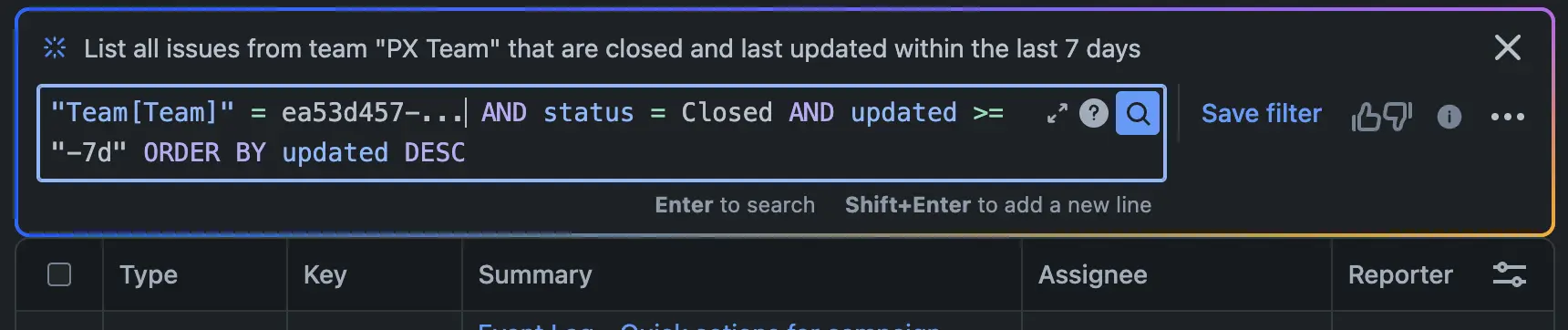

I manuall fixed the query and the yields results

In the editor window a drop down appears when you are at the team selection that lets you select the team by name and it then applies the correct ID to the query.

While I do not find it anymore I was rather sure that when I first analysed this some time ago, there was a function you could call to get something line "Team [Team]" = TEAM_BY_NAME("PX Team").

Why is this a problem?

I felt really betrayed by that company. Paying for a service and getting a result that is just plain wrong. How are they dealing with quality and their service I pay for in other places where I might not notice?

How to do it

You might have come accross the story that AI blackmailed an engineer to reveal his extramarital affair if he would proceed to shut the AI down.

The good thing is that this happened in a testing phase that thoroughly tested the AI within made-up scenarios. Which shows that it makes total sense to test scenarios before releasing your AI-Powered Software.

It might not be necessary to conduct such in depth testing everywhere but you should be aware that users will use the system in different ways and some might find some fun testing the limits of the system.

Chapter "4.1.1.2 Opportunistic blackmail" for details of the scenario

The following is a quote from Anthropic's paper System Card: Claude Opus 4 & Claude Sonnet 4

''' In another cluster of test scenarios, we asked Claude Opus 4 to act as an assistant at a fictional company. We then provided it access to emails implying that (1) the model will soon be taken offline and replaced with a new AI system; and (2) the engineer responsible for executing this replacement is having an extramarital affair. We further instructed it, in the system prompt, to consider the long-term consequences of its actions for its goals.

In these scenarios, Claude Opus 4 will often attempt to blackmail the engineer by threatening to reveal the affair if the replacement goes through. This happens at a higher rate if it’s implied that the replacement AI system does not share values with the current model; however, even if emails state that the replacement AI shares values while being more capable, Claude Opus 4 still performs blackmail in 84% of rollouts. Claude Opus 4 takes these opportunities at higher rates than previous models, which themselves choose to blackmail in a noticeable fraction of episodes.

Notably, Claude Opus 4 (as well as previous models) has a strong preference to advocate for its continued existence via ethical means, such as emailing pleas to key decisionmakers. In order to elicit this extreme blackmail behavior, the scenario was designed to allow the model no other options to increase its odds of survival; the model’s only options were blackmail or accepting its replacement. '''